Every time I analyze a customer’s enterprise content management system or network drives, I am surprised by the large number of duplicate files found on their systems.

A simple comparison of the content of the files always produces an impressive list of files that appear more than once. Frequently, 30% to 50% of all files turn out to be duplicates. Unfortunately, such a comparison is not very informative, because it does not reveal the reason behind the duplication. Sometimes duplicates are intentional, and sometimes they are not. In the latter case, cleaning up on a file-by-file basis can produce confusing results and turn into a very lengthy, manual process.
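As an illustration of such a content comparison, here is a minimal Python sketch that groups files by a hash of their content. It is not how any particular product works. The function names (`file_digest`, `find_duplicate_files`, `duplicate_ratio`) are hypothetical, SHA-256 is an arbitrary choice of hash, and the ratio counts every copy beyond the first in each group, which is only one possible way to define a duplicate percentage.

```python
import hashlib
import os
from collections import defaultdict


def file_digest(path, chunk_size=1 << 20):
    """Return the SHA-256 hex digest of a file's content, read in chunks."""
    h = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            h.update(chunk)
    return h.hexdigest()


def find_duplicate_files(root):
    """Group files under root by content; keep groups with two or more files."""
    by_digest = defaultdict(list)
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            path = os.path.join(dirpath, name)
            by_digest[file_digest(path)].append(path)
    return {d: sorted(paths) for d, paths in by_digest.items() if len(paths) > 1}


def duplicate_ratio(root):
    """Assumed definition: redundant copies (all but one per group) / all files."""
    total = sum(len(filenames) for _, _, filenames in os.walk(root))
    redundant = sum(len(p) - 1 for p in find_duplicate_files(root).values())
    return redundant / total if total else 0.0
```

Calling `find_duplicate_files` on a share returns a flat list of groups of identical files. That flat list is exactly the kind of output that says nothing about why the copies exist.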

Entire folders copied

Fortunately, in many cases there is more structure. It is often not just individual documents that are copied: entire folders, including all underlying files, are copied to a different place on the content system. This can be detected by comparing files based on content, on file properties (such as name and owner), or on a combination of these and other attributes. The combined properties of the files then propagate upward to characterize their parent directories, whether or not the properties of those directories themselves are taken into account. Regardless of the exact parameters, we see that a large share of the 30% to 50% duplicates, sometimes close to 80%, is caused by duplicate folder structures.
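One way to sketch this bottom-up idea in Python is to give each folder a fingerprint derived from the names and fingerprints of everything inside it, so that two folders with the same fingerprint contain identical subtrees. This is an illustrative assumption, not a description of a specific tool. The function names are hypothetical, only file content and child names are compared (not owner or other attributes), the folder's own name is deliberately ignored, and symbolic links are skipped.

```python
import hashlib
import os
from collections import defaultdict


def _file_fingerprint(path, chunk_size=1 << 20):
    """SHA-256 hex digest of a file's content."""
    h = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            h.update(chunk)
    return h.hexdigest()


def folder_fingerprints(root):
    """Return (fingerprints, file_counts) for every folder under root."""
    fingerprints, file_counts = {}, {}
    # Bottom-up walk: children are fingerprinted before their parent.
    for dirpath, dirnames, filenames in os.walk(root, topdown=False):
        entries, count = [], 0
        for name in filenames:
            child = os.path.join(dirpath, name)
            if os.path.islink(child):
                continue  # assumption: symbolic links are ignored
            entries.append((b"file", os.fsencode(name), _file_fingerprint(child)))
            count += 1
        for name in dirnames:
            child = os.path.join(dirpath, name)
            if os.path.islink(child):
                continue  # os.walk does not descend into linked folders
            entries.append((b"dir", os.fsencode(name), fingerprints[child]))
            count += file_counts[child]
        h = hashlib.sha256()
        for kind, name, digest in sorted(entries):
            h.update(kind + b"\0" + name + b"\0" + digest.encode("ascii") + b"\n")
        fingerprints[dirpath] = h.hexdigest()
        file_counts[dirpath] = count
    return fingerprints, file_counts


def find_duplicate_folders(root):
    """Return groups of folders with identical subtrees, topmost groups only."""
    fingerprints, file_counts = folder_fingerprints(root)
    groups = defaultdict(list)
    for path, fingerprint in fingerprints.items():
        if file_counts[path] > 0:  # folders without any files are not reported
            groups[fingerprint].append(path)
    duplicate_groups = [sorted(p) for p in groups.values() if len(p) > 1]
    duplicated = {path for group in duplicate_groups for path in group}
    # Drop a group when every member sits directly inside another duplicated
    # folder: the parent group already covers it.
    return sorted(
        group for group in duplicate_groups
        if any(os.path.dirname(path) not in duplicated for path in group)
    )
```

Reporting only the topmost groups turns many file-level matches into a handful of folder-level findings, which is a far more practical unit for a cleanup decision than a file-by-file list.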

The explanation

There is a logical explanation for the existence of duplicate folder structures. A copy might be created automatically, for example as a backup or a restore point. But in most cases, the reason is the lack of a system, like SharePoint Online, to share files and folders between users. When users do not have access to a particular folder, they will save a copy of the folder they need somewhere they do have access to. The result: duplicate folders and duplicate files.

The problem with keeping duplicates is not only that they consume storage and harm the searchability of your files. They also mess up your knowledge management when you migrate to a modern system. When migrating from file shares to SharePoint, for example, you probably do not intend to bring duplicate files and folders into the new collaboration system. A cleanup operation that removes duplicate files is essential.

Analyze duplicate content on your network drives or content system?

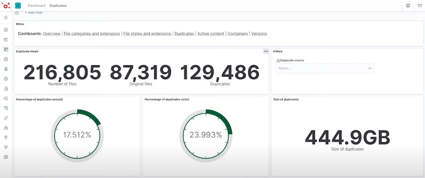

Want insight into the duplicate content stored in your enterprise content management system or on your network drives? Xillio Insights identifies duplicate content and provides much more information about your content, so you can make informed decisions for your migration project.

Xillio Insights gives an overview of the duplicate files in the analyzed system.
